TensorFlow Embedding Notes

Keep one thing in mind throughout: every value in feature_batch is an index into the embedding matrix, that is, the number of the row to look up.

1. Embedding in tf 1.x

Use the embedding_lookup function to implement an embedding, as follows:

# embedding matrix 4x4

Output:

embedding1
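A minimal sketch of such a lookup, written in the 1.x graph-and-session style. It assumes a TensorFlow installation that exposes `tf.compat.v1`; the matrix values and the contents of `feature_batch_values` are illustrative assumptions, not the values from the original listing.

```python
import numpy as np
import tensorflow as tf

# Embedding matrix 4x4 (illustrative values): row i is the vector for index i.
embedding_values = np.arange(16, dtype=np.float32).reshape(4, 4)
# Hypothetical feature batch: each value is a row index into the matrix.
feature_batch_values = [2, 3, 1, 0]

# Build the ops inside an explicit graph, as in tf 1.x.
graph = tf.Graph()
with graph.as_default():
    embedding = tf.constant(embedding_values)
    feature_batch = tf.constant(feature_batch_values)
    lookup_op = tf.nn.embedding_lookup(embedding, feature_batch)

# In 1.x style the result only exists after sess.run.
with tf.compat.v1.Session(graph=graph) as sess:
    embedding1 = sess.run(lookup_op)

print(embedding1)  # rows 2, 3, 1, 0 of the matrix, in that order
```

The lookup is plain row selection: the output has one row per entry of the feature batch, and row k of the output is the matrix row whose index is the k-th feature value.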

2. Embedding-like operations in tf 1.x - single-dimension indexing

# single-dimension indexing

Output:

gather_a
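Single-dimension indexing of this kind can be sketched with `tf.gather`, which is assumed here to be the op behind `gather_a`. The tensor values, and the names `b`, `index_b` and `gather_b`, are illustrative assumptions.

```python
import tensorflow as tf

graph = tf.Graph()
with graph.as_default():
    # 1-D case: pick elements 0, 2 and 4 of a vector.
    a = tf.constant([10, 20, 30, 40, 50])
    index_a = tf.constant([0, 2, 4])
    gather_a = tf.gather(a, index_a)

    # 2-D case: the indices select whole rows (axis 0 by default),
    # which is the same row selection that an embedding lookup performs.
    b = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
    index_b = tf.constant([2, 0])
    gather_b = tf.gather(b, index_b)

with tf.compat.v1.Session(graph=graph) as sess:
    gather_a_value, gather_b_value = sess.run([gather_a, gather_b])

print(gather_a_value)  # [10 30 50]
print(gather_b_value)  # [[7 8 9] [1 2 3]]
```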

3. Embedding-like operations in tf 1.x - multi-dimensional indexing

# multi-dimensional indexing

Output:

tf.gather_nd(a, index_a)
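A minimal sketch of multi-dimensional indexing with `tf.gather_nd`, where each entry of the index tensor is a coordinate rather than a single position. The values of `a` and `index_a`, and the extra name `row_index`, are illustrative assumptions.

```python
import tensorflow as tf

graph = tf.Graph()
with graph.as_default():
    a = tf.constant([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

    # Full coordinates (row, column): each pair selects one scalar.
    index_a = tf.constant([[0, 2], [1, 1], [2, 0]])
    elements = tf.gather_nd(a, index_a)

    # Partial coordinates (row only): each entry selects a whole row.
    row_index = tf.constant([[1], [0]])
    rows = tf.gather_nd(a, row_index)

with tf.compat.v1.Session(graph=graph) as sess:
    elements_value, rows_value = sess.run([elements, rows])

print(elements_value)  # [3 5 7]
print(rows_value)      # [[4 5 6] [1 2 3]]
```

The length of the last index dimension decides what is returned: with as many coordinates as `a` has dimensions the result is a scalar per index, and with fewer coordinates the result is a slice per index.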

4. Embedding-like operations in tf 1.x - embedding over a sparse representation

# sparse embedding: an embedding over a sparse representation

Output:

a

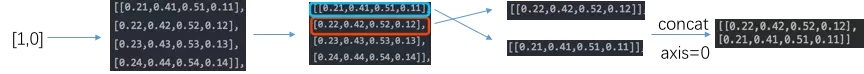
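One way to sketch an embedding over a sparse representation is `tf.nn.embedding_lookup_sparse` applied to a `tf.SparseTensor` of ids. That this is the op used in the original listing is an assumption, as are the matrix values, the ids, and the choice of the `"sum"` combiner.

```python
import numpy as np
import tensorflow as tf

# Embedding matrix 4x4 (illustrative values).
embedding_values = np.arange(16, dtype=np.float32).reshape(4, 4)

graph = tf.Graph()
with graph.as_default():
    embedding = tf.constant(embedding_values)
    # Three examples with a variable number of ids each:
    #   example 0 -> ids 0 and 1, example 1 -> id 2, example 2 -> id 3.
    sparse_ids = tf.SparseTensor(
        indices=[[0, 0], [0, 1], [1, 0], [2, 0]],
        values=tf.constant([0, 1, 2, 3], dtype=tf.int64),
        dense_shape=[3, 2],
    )
    # sp_weights=None gives every id a weight of 1; "sum" adds the
    # looked-up rows of each example into a single vector.
    combined = tf.nn.embedding_lookup_sparse(
        embedding, sparse_ids, None, combiner="sum"
    )

with tf.compat.v1.Session(graph=graph) as sess:
    sparse_embedding = sess.run(combined)

print(sparse_embedding)
# example 0 is row 0 + row 1, examples 1 and 2 are rows 2 and 3 unchanged
```

As in the dense case, every id is still a row index into the embedding matrix; the sparse tensor only records which ids belong to which example.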

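5. Embedding in tf 2.0

In tf 2.0, an embedding can likewise be implemented with embedding_lookup. The difference is that sess.run is no longer needed to obtain the result: the op executes eagerly, and its result can be converted directly to a NumPy array.

embedding = tf.constant(

Output:

embedding1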
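A minimal sketch of the eager version, assuming TensorFlow 2.x with eager execution enabled (the default). The matrix values and the feature batch are illustrative assumptions.

```python
import numpy as np
import tensorflow as tf

# Embedding matrix 4x4 (illustrative values).
embedding = tf.constant(np.arange(16, dtype=np.float32).reshape(4, 4))
# Hypothetical feature batch of row indices.
feature_batch = tf.constant([2, 3, 1, 0])

# No graph or session: the op runs immediately and .numpy() extracts the values.
embedding1 = tf.nn.embedding_lookup(embedding, feature_batch).numpy()

print(embedding1)  # rows 2, 3, 1, 0 of the matrix, in that order
```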

When an embedding layer is needed inside a neural network, Keras is the recommended way to build it:

from tensorflow.keras import layers
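A minimal sketch of the Keras layer in use, assuming TensorFlow 2.x. The vocabulary size, vector width and batch contents are illustrative assumptions. Unlike the constant matrices used with embedding_lookup, the layer's matrix is a randomly initialized, trainable weight.

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras import layers

# input_dim is the number of rows in the embedding matrix (valid indices 0..3),
# output_dim is the length of each embedding vector.
embedding_layer = layers.Embedding(input_dim=4, output_dim=4)

# Hypothetical batch of 2 examples with 2 indices each.
feature_batch = tf.constant([[2, 3], [1, 0]])
vectors = embedding_layer(feature_batch)

print(vectors.shape)  # (2, 2, 4): one 4-dimensional vector per index

# The layer performs the same row lookup against its own weight matrix.
weights = embedding_layer.get_weights()[0]
same = np.allclose(vectors.numpy(), weights[feature_batch.numpy()])
print(same)
```

Inside a model, the layer is typically placed first so that integer feature indices are turned into dense vectors before any other layer sees them.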